Finding mushrooms with your mobile phone

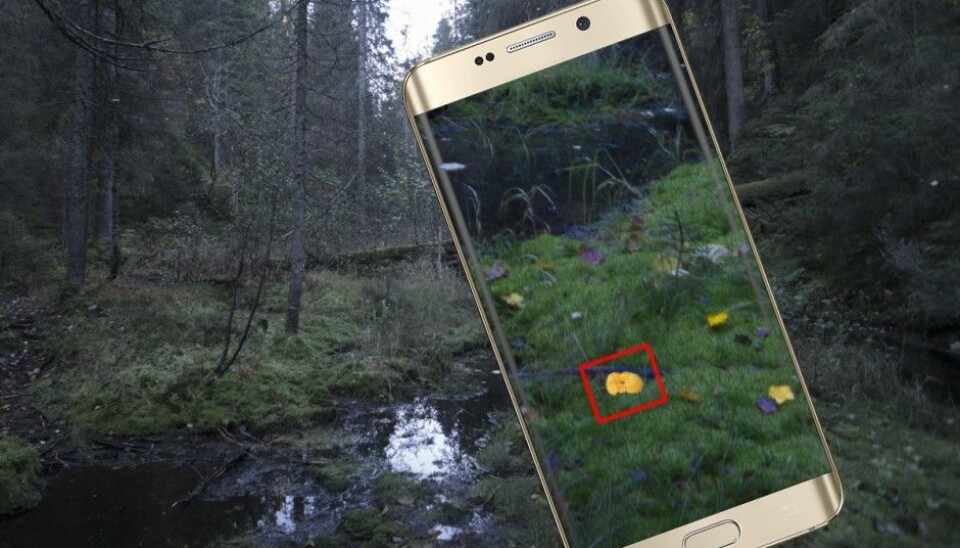

Advanced image recognition can scan the forest floor looking for good mushroom locations. And it’s not just a fantasy, say two Norwegian NTNU students.

How can neural networks and other methods detect what’s in a picture? The answer, customized for mushroom season, is explained below.

An app that finds mushrooms? Not impossible, if your mobile has a camera that not only sees but can also interpret what it sees.

Image recognition is already a big industry. Facebook recognizes your friends in pictures you post. Cameras have smart shutters that click automatically when you smile.

Imagining an app

Now two NTNU students, Stian Jensen and Andreas Løve Selvik, are using the same technology to find mushrooms in the woods - with a mobile app.

The app is currently only an idea, described in their project report from last fall.

“This is a cool application, and the technology they describe is realistic. But the challenge is to find mushrooms that are partially hidden under grass and other vegetation,” says Arnt-Børre Salberg, a senior research scientist at the Norwegian Computing Center.

“If the program is designed to distinguish different species, it will also require zero tolerance for poisonous mushrooms. That’s tough to achieve,” he says.

More than fantasy

Mushrooming is an industry with a long tradition in many parts of the world, Jensen and Selvik write in their report. They think their system could be used to identify locations with a lot of mushrooms before heading out into the field, for example using autonomous drones.

Jensen’s and Selvik’s idea isn’t just a pipedream. They’ve analyzed the idea in technical detail. The report provides an overview of how computers have learned to interpret images.

Take an ordinary chanterelle. How does a computer program, whether on a mobile or a PC, tell a mushroom from a yellow leaf?

Broadly speaking, the answer lies in two methods: an older one, Support Vector Machines (SVM); and a new one, deep neural networks. The report describes both.

Image cropping

In greatly simplified terms, we can imagine SVM this way: we put a yellow leaf beside a chanterelle in the moss. What does SVM do?

At first nothing, it turns out. The image is too cluttered for SVM to make sense of it, with moss and pine needles and ants scattered about. The image needs to be simplified.

Image cropping comes to the rescue. It manages to outline the chanterelle and the leaf, ignoring all the rest of the forest floor around them. The cropping tool, called scale-invariant feature transform (SIFT) in techspeak, produces a simplified collection of points that describes the approximate shape of the chanterelle or leaf.

As the name suggests, this feature description is independent of size. A small and a large chanterelle yield the same drawing, if the points have the same form.
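The idea of size independence can be sketched in a few lines of Python. This is not SIFT itself, only a toy stand-in: it assumes an outline is given as a list of (x, y) points, and the helper names `normalize_outline` and `same_shape` are made up for illustration. Each outline is moved to its centre and divided by its average radius, so a small and a large copy of the same shape end up with the same description.

```python
import math


def normalize_outline(points):
    """Centre an outline and rescale it so its average radius is 1."""
    n = len(points)
    cx = sum(x for x, _ in points) / n
    cy = sum(y for _, y in points) / n
    centred = [(x - cx, y - cy) for x, y in points]
    scale = sum(math.hypot(x, y) for x, y in centred) / n
    return [(x / scale, y / scale) for x, y in centred]


def same_shape(a, b, tol=1e-9):
    """True if two outlines match point by point after normalization."""
    pairs = zip(normalize_outline(a), normalize_outline(b))
    return all(
        math.isclose(ax, bx, abs_tol=tol) and math.isclose(ay, by, abs_tol=tol)
        for (ax, ay), (bx, by) in pairs
    )


# Hypothetical outlines: a small shape, the same shape five times larger
# and moved elsewhere in the picture, and a differently proportioned one.
small = [(0, 0), (2, 0), (2, 1), (0, 1)]
large = [(10 + 5 * x, 20 + 5 * y) for x, y in small]
other = [(0, 0), (2, 0), (2, 2), (0, 2)]

print(same_shape(small, large))  # same form, different size
print(same_shape(small, other))  # different form
```

Real feature descriptors are far richer than this, but the principle is the same: describe the form, not the size.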

Hundreds of dimensions

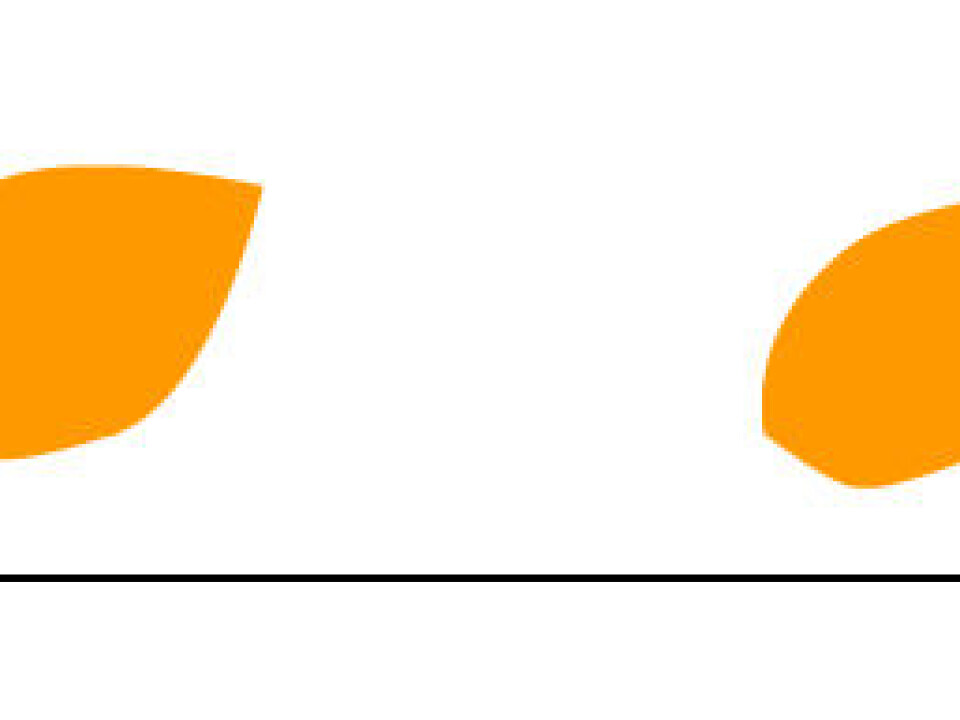

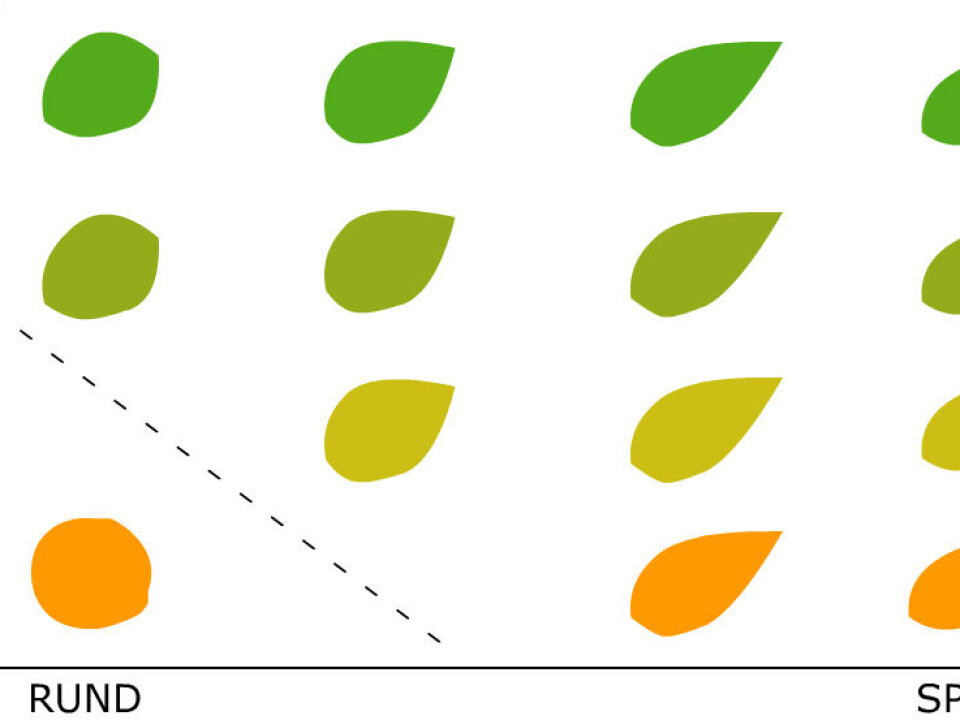

Now SVM has something it can work with. Suppose the chanterelle is round and the leaf is pointed. They have two different forms. Round shapes fall at one end of the scale, and pointed shapes at the other.

SVM is ready to take action. It creates a dividing line on the scale. On the left end is the mushroom, on the right the leaf. All set, right?

Well, not always. Some leaves happen to be round, so maybe we can’t just look at the shape. How about looking at the colour, too?

Jensen and Selvik add a vertical line to the scale. Green is at the top, yellow at the bottom. Then they go out and take pictures of chanterelles and leaves in the moss.

Each leaf - or mushroom - gets a place on the chart based on its shape and colour. A green leaf goes top left. A pointed yellow leaf sits bottom right.

Now SVM can distinguish the boundary as a diagonal line. The chanterelle still glows from the bottom left - yellow and round.
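The diagonal line can be sketched in code. The following is a minimal illustration, not the method from the report: it assumes made-up measurements of pointedness and greenness between 0 and 1, and it trains a straight-line classifier using the hinge loss that SVMs are built on. It leaves out the regularization term of a full SVM, so it finds a line that separates the two groups with some room to spare, not necessarily the widest possible gap.

```python
# Toy data, invented for illustration: (pointedness, greenness) in [0, 1].
# Round yellow chanterelles sit near (0, 0), the bottom left of the chart.
CHANTERELLES = [(0.1, 0.1), (0.2, 0.15), (0.15, 0.05), (0.2, 0.2)]
LEAVES = [(0.9, 0.1), (0.8, 0.9), (0.2, 0.9), (0.7, 0.3), (0.5, 0.6)]


def train(samples, rate=0.1, max_epochs=5000):
    """Fit a line w1*x + w2*y + b = 0 by repeated hinge-loss corrections."""
    w1 = w2 = b = 0.0
    for _ in range(max_epochs):
        updated = False
        for (x, y), label in samples:
            # The hinge loss is non-zero when a point is on the wrong side
            # of the line or too close to it (margin below 1).
            if label * (w1 * x + w2 * y + b) < 1:
                w1 += rate * label * x
                w2 += rate * label * y
                b += rate * label
                updated = True
        if not updated:  # every point clears the margin, so stop
            break
    return w1, w2, b


def predict(model, point):
    """Say which side of the line a point falls on."""
    w1, w2, b = model
    x, y = point
    return "chanterelle" if w1 * x + w2 * y + b > 0 else "leaf"


samples = [(p, +1) for p in CHANTERELLES] + [(p, -1) for p in LEAVES]
model = train(samples)
print(predict(model, (0.1, 0.1)))  # a round yellow training example
print(predict(model, (0.8, 0.9)))  # a pointed green training example
```

Because the toy groups can be split by a straight line, the loop stops once every training point sits on the correct side with a margin of at least 1.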

But what about round yellow leaves? Hmmm. SVM runs into problems because SIFT isn’t describing the data adequately. It appears we need to distinguish more than just shape and colour.

We could look for the serrated outline of the leaves against the undulating outline of the chanterelle, or look for the veins in the leaf, for example.

SVM is able to work with hundreds of different properties simultaneously. It treats each object as a point in a high-dimensional feature space and draws boundaries that separate the chanterelle points from the leaf points.

Patterned on brain cells

Then came deep neural networks in 2012 and a new way to interpret images.

Actually, the method itself wasn’t new. What was new was that computers had become fast enough to run neural networks without slowing to a crawl.

The way neural networks work is analogous to the human brain, making it easier to understand why they require a lot of computing power.

“The advantage of deep neural networks is that they can describe the images better than SIFT can,” says Salberg.

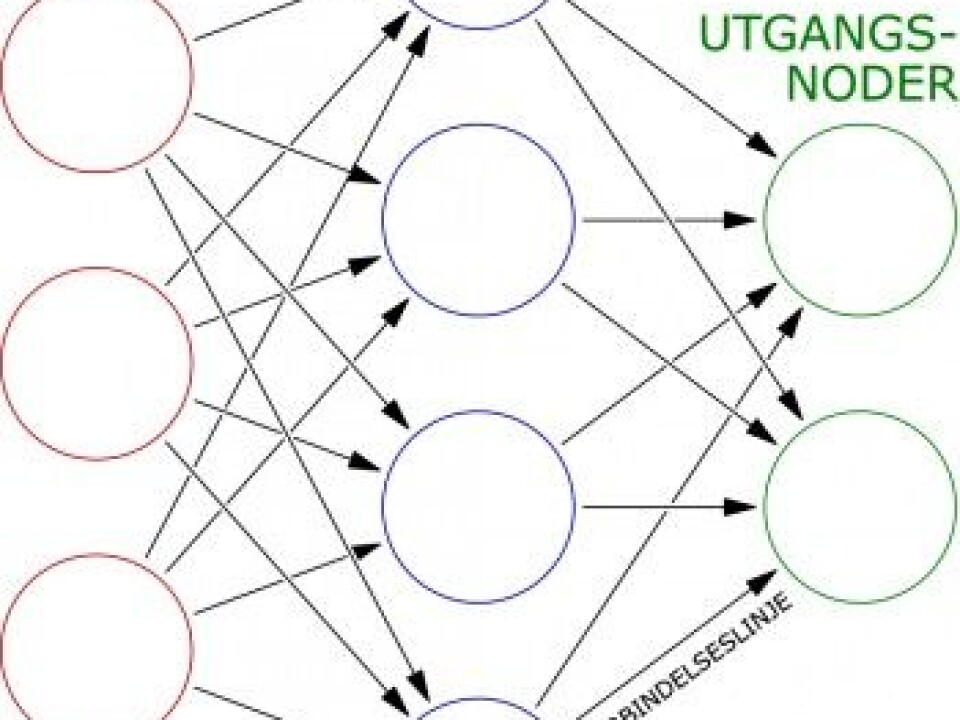

Deep neural networks mimic our brain cells, and are made up of connected nodes. Each node is connected to other nodes, just as brain cells connect to other neurons through nerves.

When the brain learns something new, some connections between these billions of nerve fibres become stronger and others become weaker. This is how our brain recognizes and imprints patterns. Similarly, neural networks “learn” when the nodes’ connecting lines become stronger or weaker.

But some differences exist, too. In artificial neural networks, the nodes and connecting lines have simple numerical values, whereas nerve signals in the brain are much more complicated.

Another difference is that a neural network sends signals only one way. Data - like the mushroom images - first go to the input nodes.

Then the data are sent on through the connecting lines and multiple hidden node layers. The results, or interpreted images, emerge at the output nodes.
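This one-way flow can be sketched as follows. The example is a bare-bones illustration with three input values, two hidden nodes and one output node. The connection strengths are picked by hand, not learned, and the function names are made up for this sketch.

```python
import math


def sigmoid(z):
    """Squash any number into the range 0 to 1."""
    return 1.0 / (1.0 + math.exp(-z))


def layer(inputs, weights, biases):
    """One layer: each node sums its weighted inputs, then squashes."""
    return [
        sigmoid(sum(w * x for w, x in zip(node_weights, inputs)) + bias)
        for node_weights, bias in zip(weights, biases)
    ]


def forward(values, network):
    """Send the input values through every layer in turn, one way only."""
    signal = values
    for weights, biases in network:
        signal = layer(signal, weights, biases)
    return signal


# 3 inputs -> 2 hidden nodes -> 1 output node.
# The numbers are arbitrary illustrative connection strengths.
NETWORK = [
    ([[0.4, -0.6, 0.1], [0.7, 0.2, -0.5]], [0.0, 0.1]),
    ([[1.2, -0.8]], [0.05]),
]

score = forward([0.9, 0.8, 0.1], NETWORK)
print(score)  # a single value between 0 and 1
```

A real image network has millions of such connections and many more layers, but each layer does the same thing: weigh the incoming signals, add them up and pass the result on.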

But how does a neural network learn to see the difference between a mushroom and a leaf?

Training examples

To instruct your computer, you need loads of pre-processed images that the computer can learn from. The images have to be carefully described so the software can know exactly what it’s searching for in a given image.

The advantage of this method is that the machine learns quickly. The disadvantage is that you have to come up with so many prepared images. Where can they be found?

Jensen and Selvik suggest two ways to obtain them. Image databases like ImageNet, which organizes images according to a linguistic hierarchy, are one source.

Their second and more original suggestion is to use computer games. Computer games often take place in huge worlds with landscapes that lend themselves well to including images of mushrooms.

This can quickly generate a huge quantity of indexed training images. You provide images for the neural network to interpret, and then you give it a pat on the back every time it finds a mushroom.

Initially, the program frequently misses the mark, but little by little the neural network undergoes a learning process.

Backpropagation

Each time the program’s answer is checked against the correct one, the network makes tiny changes backwards from the output nodes via the intermediate nodes to the input nodes, through the connecting lines, those artificial nerve fibres between the artificial brain cells.

This is called backpropagation. After lots of this trial and error and correction, the neural network gradually evolves into a process that works.
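The correction loop can be sketched in plain Python. This is a toy illustration under stated assumptions, not the setup from the report: the two training examples, the features (yellowness, roundness), the starting connection strengths and the learning rate are all invented. The error at the output node is passed backwards to the hidden nodes, and every connection is nudged a little in the direction that reduces the error.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


# Hypothetical examples: (yellowness, roundness) -> 1.0 mushroom, 0.0 leaf
EXAMPLES = [((0.9, 0.9), 1.0), ((0.1, 0.2), 0.0)]

# 2 inputs -> 2 hidden nodes -> 1 output node, arbitrary starting values
net = {
    "w_hidden": [[0.5, -0.4], [0.3, 0.8]],
    "b_hidden": [0.0, 0.0],
    "w_out": [0.2, -0.3],
    "b_out": 0.0,
}


def forward(net, x):
    """Return the hidden node values and the output for one example."""
    hidden = [
        sigmoid(sum(w * xi for w, xi in zip(ws, x)) + b)
        for ws, b in zip(net["w_hidden"], net["b_hidden"])
    ]
    out = sigmoid(sum(w * h for w, h in zip(net["w_out"], hidden)) + net["b_out"])
    return hidden, out


def total_loss(net):
    """Sum of squared errors over all examples."""
    return sum(0.5 * (forward(net, x)[1] - t) ** 2 for x, t in EXAMPLES)


def train_step(net, x, target, rate=0.5):
    hidden, out = forward(net, x)
    # Error signal at the output node.
    delta_out = (out - target) * out * (1.0 - out)
    # Pass the error backwards to each hidden node along its connection.
    delta_hidden = [
        delta_out * net["w_out"][j] * hidden[j] * (1.0 - hidden[j])
        for j in range(len(hidden))
    ]
    # Nudge every connection against its share of the error.
    for j, h in enumerate(hidden):
        net["w_out"][j] -= rate * delta_out * h
    net["b_out"] -= rate * delta_out
    for j, d in enumerate(delta_hidden):
        for i, xi in enumerate(x):
            net["w_hidden"][j][i] -= rate * d * xi
        net["b_hidden"][j] -= rate * d


loss_before = total_loss(net)
for _ in range(2000):
    for x, target in EXAMPLES:
        train_step(net, x, target)
loss_after = total_loss(net)
print(loss_before, loss_after)  # the error shrinks as training proceeds
```

With only two examples this is trivially easy to learn. The point is the direction of travel: errors flow backwards, and the connections change a little at a time.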

Does this mean you could send your computer into the deep dark woods and let it gather its own knowledge?

“No, we're not there yet, but that's where we want to go. It’s called unsupervised learning,” says Salberg.

The method has its pros and cons. The upside is that you don’t need a lot of pre-processed images. You can let the neural network puzzle out the raw reality.

The downside is that this kind of learning requires more computing power. But if Moore’s law holds, with the number of transistors on a chip, and roughly with it computing power, doubling about every two years, that may not be an issue.
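Taking the two-year doubling at face value, the arithmetic is easy to check. The function below is a back-of-the-envelope sketch with a made-up name, not a forecast.

```python
def growth_factor(years, doubling_period=2.0):
    """How many times more computing power after a number of years,
    assuming it doubles once per doubling period."""
    return 2.0 ** (years / doubling_period)


print(growth_factor(10))  # 32.0: five doublings in ten years
```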

Offline in the mushroom woods

At this point, Jensen and Selvik are passing the baton on to other mushroom lovers and data geeks. The program needs to be developed and trained on computers, before it can be downloaded as an app onto a mobile phone.

“The neural network training requires PCs with a lot of computing power, often with several large video cards in parallel,” says Salberg.

Once the neural network is trained, it needs much less computing power to interpret the images.

Salberg is optimistic that the app will be able to run on a mobile phone - even offline in the mushroom patch. Offline technology development is in the works.

Rapid development

Jensen and Selvik find reason to be confident, given the speed at which the technical development of mobiles is happening.

They believe that neural networks, with their greater accuracy, are supplanting earlier image recognition methods.

But until their app becomes reality, only one neural network is really good enough for dedicated mushroom hunters – your own noggin.

Reference:

Stian Jensen and Andreas Løve Selvik: Towards Real-Time Object Detection of Mushrooms, A Preliminary Review. Project report, NTNU, 2015.

--------------------------------------

Read the Norwegian version of this article at forskning.no