Opinion

Let’s not vilify our students over ChatGPT

OPINION: With the advent of ever-more capable artificial intelligence (AI) tools such as ChatGPT, we once again have an opportunity to choose a rational, humane future over clinging to an irrational, suspicious past.

«For us, it is important that we have common guidelines for how to use AI to promote learning»

- Maika Marie Godal Dam, leader of the Norwegian Students’ Organization (NSO)

The recent introduction of large language models (LLMs) like ChatGPT has been met with confusion, excitement, and fear.

Much of this stems from uncertainty about what exactly these tools can do, how useful or risky they are, how they may infringe on our privacy, whether they threaten norms in education, whether they will be banned or restricted, and whether they will somehow overrule humans.

Learning to love the new Large Language Models?

In the miasma of confusion we as educators are facing regarding large language models, we can be fairly certain of one thing: these tools will be a part of our current students’ working lives.

Further, they will likely be a transformative part of their lives, in ways we cannot fully anticipate. Any conscientious educator will realize what this means: we must help our students navigate LLMs.

Or perhaps it is more accurate to say that we must help our students help us.

Us versus them?

Decades of educational research have indicated what educators should be doing, including co-constructing our curriculum and knowledge with our students (students as partners); reconsidering our assessments to be more 'authentic', with significance beyond the classroom; and anticipating change rather than shying away from it.


However, much of the recent dialogue surrounding ChatGPT (and anticipated rivals such as Bard and Bing’s chatbot) has had two unfortunate effects.

It has created an 'us versus them' dichotomy with our students, essentially vilifying those we are obliged to assist, and it has doubled down on the unwarranted assumption that high-stakes school exams are our best option for assessment.

Yet we cannot reject this particular new technology and still expect to remain relevant in our spheres of influence.

Considering the student perspective

We recently conducted a survey of students and educators in the Faculty of Mathematics and Natural Sciences at the University of Bergen to understand how our colleagues and students define, use, and perceive LLMs (specifically, ChatGPT).

A key finding is that students hold a positive but balanced view of ChatGPT. Students also reflected on how ChatGPT will affect their learning. One student said:

"Can we cheat using ChatGPT? Then maybe the assessment form needs to be adjusted?"

ChatGPT can be a useful tool that to a certain extent can be compared to a mathematical calculator. It streamlines parts of the work, but one still has to use it in the right way and understand the 'output'.

Another student commented:

"Before the end of the 2020s, … what previously was a literature search will become a conversation with a virtual assistant that can provide sources etc. Every student will have an AI research assistant, and they will spend a greater amount of their time thinking about higher level tasks."

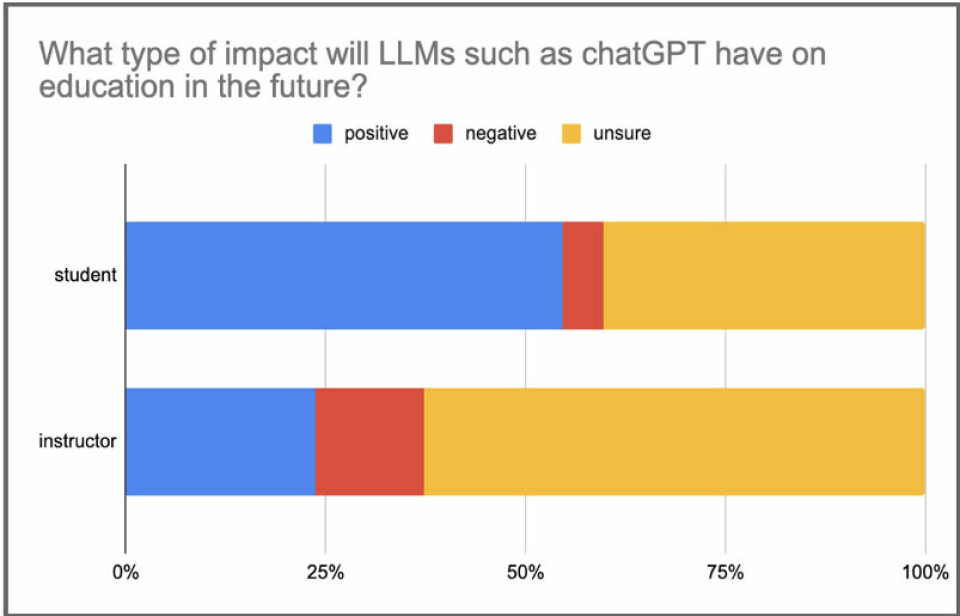

Further, students generally have a more positive attitude about the future of ChatGPT than educators do (see Figure 1), while their responses also reveal various misunderstandings about the capabilities and limitations of LLMs, including an assumption that the output is factually accurate.

This gap between perception and reality offers instructors an opportunity for in-class discussions and activities involving LLMs ('Is the generated response accurate? If not, why not? How can we best use these generated responses?').

So, how can we embrace these new LLMs in education?

Here we offer some suggestions for constructively working with LLMs in our courses and teaching activities.

First, we can help our students (and ourselves) understand what these LLMs are. LLMs are pattern-matching algorithms based on probabilities, not humans in silico. Their responses address the question 'what would an answer look like?', not 'what is the answer?'.

We can encourage students to use these tools in our classroom activities, and then lead a discussion on the information that was provided, its reliability and accuracy, and whether they can identify likely sources of information.

One of us developed an in-class activity in which students use ChatGPT to figure out how to do simple bioinformatics tasks such as DNA sequence retrieval and analysis.

When we asked the students afterwards how useful this approach was, most respondents (78 per cent) rated it very useful, 17 per cent rated it moderately useful, and none said it was not useful at all.

In sum, students seem eager to use these tools constructively, and we have an opportunity to model for them cautious and critical exploration.

Helping to prepare our students for an uncertain future is an educator’s job

Second, we should partner with our students. Our students have valuable perspectives, but they also look to us for guidance and want to be prepared for the workforce of the future, in or out of academia.

Further, a significant and growing body of literature on 'students as partners' indicates that this approach leads to enhanced learning, higher engagement in class, and positive interactions between students and teachers (Bovill, 2020; Kaur and Noman, 2020; Glessmer and Daae, 2022).

A fairly simple entry point would be to recruit two or three student ambassadors from each course we teach (Cook-Sather et al., 2019). These individuals can facilitate communication between students and instructors, help develop classroom activities, and represent their peers by providing consistent feedback on the course.

This type of student feedback will be especially valuable as new tools, with new features, are introduced and incorporated into their (and our) daily lives.

Critically, student partnerships help avoid the 'us versus them' mentality that characterizes much of the current dialogue around LLMs in education.

An opportunity for better assessments

Third, we should reconsider our assessments. The dominant assessment model in Norwegian higher education is an end-of-term, high-stakes test that we know is pedagogically regressive.

These exams do an excellent job of telling us which of our students are skilled test-takers, but they certainly aren’t good for overall motivation or learning, and they may exacerbate existing inequities in education (Högberg and Horn, 2022; Salehi et al., 2019).

Much of the dialogue around 'dealing with' LLMs focuses on how we can retain these exams in the face of anticipated widespread cheating. But are we assessing for learning, using assessments to support student self-correction and deep learning? And are our assessments authentic: do our students create products (e.g., portfolios) that have relevance beyond the classroom (Harlap et al., 2022)?

If we can create assessments informed by principles of constructive alignment and authenticity, and if we can communicate this rationale to our students, it should reduce the need to obsess so much over potential cheating.

Students who want to learn, and who seek end-of-term deliverables to share with potential employers, will have more internal motivation to do well on these assessments. Some students will still cheat, as they always have; in that respect, nothing has changed.

We have had opportunities in the past to choose student-centered, evidence-based instruction that embraces the affordances of modern technology (e.g., the development of remote instruction during the COVID-19 pandemic). Some of us failed that test.

With the advent of ever-more capable AI tools such as ChatGPT, we once again have an opportunity to choose a rational, humane future over clinging to an irrational, suspicious past. And it might be fun!

References

- Bovill, C. (2020). Higher Education, 79(6), 1023–1037. https://doi.org/10.1007/s10734-019-00453-w

- Cook-Sather, A., Bahti, M., & Ntem, A. (2019). Elon University Center for Engaged Learning.

- Glessmer, M. S., & Daae, K. (2022). Oceanography, 35(1), 81–83. https://doi.org/10.5670/oceanog.2021.405

- Harlap, Y., Jørgensen, C., & Cotner, S. (2022). Nordic Journal of STEM Education, 6(1).

- Högberg, B., & Horn, D. (2022). European Sociological Review, 38(6), 975–987.

- Kaur, A., & Noman, M. (2020). Journal of University Teaching & Learning Practice, 17(1). https://doi.org/10.53761/1.17.1.8

- Salehi, S., Cotner, S., Azarin, S. M., Carlson, E. E., Driessen, M., Ferry, V. E., ... & Ballen, C. J. (2019). Frontiers in Education, 4, 107. Frontiers Media SA.


Share your science or have an opinion in the Researchers' zone

The ScienceNorway Researchers' zone consists of opinions, blogs and popular science pieces written by researchers and scientists from or based in Norway.